Real-World Experiences with NetApp Cloud Data Services – Part 2: Azure NetApp Files

Ryan Beaty

This is part 2 of my blog series about NetApp Cloud Data Services. If you are interested in what Cloud Volumes ONTAP (CVO) can do for you, read my previous post. In this one, I'll focus on my experience with Azure NetApp Files (ANF).

ANF is essentially Azure's flavor of Cloud Volumes Service (CVS). Think of it as the equivalent of a NetApp volume: you simply consume a volume in Azure over NFS, SMB, or both. You don't manage the NetApp system, and you don't log into it; it's just a volume that's shared out to you. If one of your applications requires Linux servers mounted over NFS and Windows servers mounted over SMB, you can do that. The Cloud Volumes service can live in AWS, Google Cloud (GC), or Azure, where it takes the form of ANF.
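To make the NFS/SMB point concrete, here is a minimal Python sketch of what the client side looks like: a Linux server gets an /etc/fstab entry that points at the volume's IP address and export path, while a Windows server maps a UNC path. The IP address, export path, server name, and share name are hypothetical placeholders, and the mount options are a common conservative NFSv3 set rather than an official ANF recommendation.

```python
# Sketch: build client-side mount settings for an ANF volume.
# The IP address, export path, server name, and share name are hypothetical.

def nfs_fstab_entry(server_ip: str, export_path: str, mount_point: str,
                    nfs_version: str = "3") -> str:
    """Return an /etc/fstab line that mounts an ANF NFS export on a Linux server."""
    path = export_path.lstrip("/")
    # 'hard' makes the client retry I/O instead of failing it if the target is briefly unreachable.
    options = f"rw,hard,vers={nfs_version},tcp"
    return f"{server_ip}:/{path} {mount_point} nfs {options} 0 0"


def smb_unc_path(smb_server: str, share_name: str) -> str:
    """Return the UNC path a Windows server would map for an ANF SMB share."""
    return f"\\\\{smb_server}\\{share_name}"


if __name__ == "__main__":
    print(nfs_fstab_entry("10.0.1.4", "app-data", "/mnt/app-data"))
    print(smb_unc_path("anf-smb.example.local", "app-data"))
```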

The biggest difference between CVS and ANF is the architecture. With CVS in AWS and GC, the actual NetApp hardware lives in a separate Equinix data center rather than in the cloud provider's own facility. With ANF, the NetApp system lives in Azure's own data center, a couple of racks down from your compute. There is no added latency or virtual gateway needed to get from your production VNet into the NetApp VNet, so access is closer and faster.

What can you do with ANF and why would you need it? How does it compare to CVS?

Essentially, you would want ANF when you need NFS or SMB in the public cloud without running a server. With ANF, you don't have to stand up a server with its own compute and memory just to host NFS or SMB shares. ANF reduces the complexity of NFS/SMB infrastructure in the public cloud: you go to one spot and it's all there. You grant your clients access to the volume's IP addresses, and then you're good to go. If you need higher performance than AWS EFS, or than premium SSDs inside Azure, can offer, ANF is a very effective option. Creating and expanding volumes for growth is also very simple: you go in, expand or shrink the quota, and it's done. There's nothing else you have to do.
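Resizing a volume is a single quota change. The Python sketch below only assembles an Azure CLI command (`az netappfiles volume update` with `--usage-threshold`, which takes the quota in GiB) and prints it; the resource group, account, pool, and volume names are hypothetical, and running it assumes the Azure CLI is installed and logged in.

```python
import shlex


def anf_resize_command(resource_group: str, account: str, pool: str,
                       volume: str, new_quota_gib: int) -> list[str]:
    """Build an Azure CLI command that changes an ANF volume's quota.

    The CLI's --usage-threshold value is the volume quota in GiB.
    """
    if new_quota_gib <= 0:
        raise ValueError("quota must be a positive number of GiB")
    return [
        "az", "netappfiles", "volume", "update",
        "--resource-group", resource_group,
        "--account-name", account,
        "--pool-name", pool,
        "--name", volume,
        "--usage-threshold", str(new_quota_gib),
    ]


if __name__ == "__main__":
    # Hypothetical resource names; grow the volume to 1 TiB (1024 GiB).
    cmd = anf_resize_command("rg-anf-lab", "anf-account", "pool1", "vol1", 1024)
    print(shlex.join(cmd))
    # To actually run it (requires the Azure CLI and a logged-in session):
    # import subprocess; subprocess.run(cmd, check=True)
```

Shrinking works the same way: pass a smaller value for the new quota.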

On-premises workloads can also connect to Cloud Volumes. You will have higher latency, but it is possible: I've accessed ANF shares from my Equinix data center. It works as long as your data center has a direct connection into Azure, such as ExpressRoute.

However, ANF does not support SnapMirror, and I don't know if it ever will. That's where CloudSync comes into play. CloudSync is limited by your bandwidth, and like robocopy or any other file-level copy tool, it works on whole files: if even one block of a 1GB file changes, the entire 1GB file has to be resynced. That makes it far less efficient than SnapMirror, which replicates only the changed blocks.
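The difference is easy to put in numbers. Below is a deliberately simplified Python model, not how either product is actually implemented: a whole-file tool resends every file that has any changed block, while block-level replication resends only the changed 4 KiB blocks. It ignores compression, metadata, and protocol overhead, and the file names are hypothetical.

```python
GIB = 1024 ** 3
BLOCK_SIZE = 4 * 1024  # 4 KiB, the block size used by ONTAP's WAFL file system


def file_level_resync_bytes(files: dict[str, tuple[int, int]]) -> int:
    """Whole-file copy (robocopy-style): any file with a changed block is resent in full.

    `files` maps a file name to (file size in bytes, number of changed blocks).
    """
    return sum(size for size, changed in files.values() if changed > 0)


def block_level_resync_bytes(files: dict[str, tuple[int, int]],
                             block_size: int = BLOCK_SIZE) -> int:
    """Block-level replication (SnapMirror-style): only changed blocks are resent."""
    return sum(min(size, changed * block_size) for size, changed in files.values())


if __name__ == "__main__":
    # Hypothetical workload: one block changed in a 1 GiB file, one untouched 1 GiB file.
    files = {"data.mdf": (GIB, 1), "archive.bak": (GIB, 0)}
    print("file-level bytes: ", file_level_resync_bytes(files))
    print("block-level bytes:", block_level_resync_bytes(files))
```

In this toy case a single changed block costs a full 1 GiB transfer with a file-level tool versus 4 KiB with block-level replication, which is exactly the "many small changes on large files" pattern to watch out for.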

If you make a lot of small changes to large files, Cloud Volumes with CloudSync may not be the right option for you. If you're replicating back on premises, you will also pay egress charges on those transfers, which isn't cost-effective. You have to think carefully about how you're using it and how you're going to protect your data.

What I learned from working with ANF

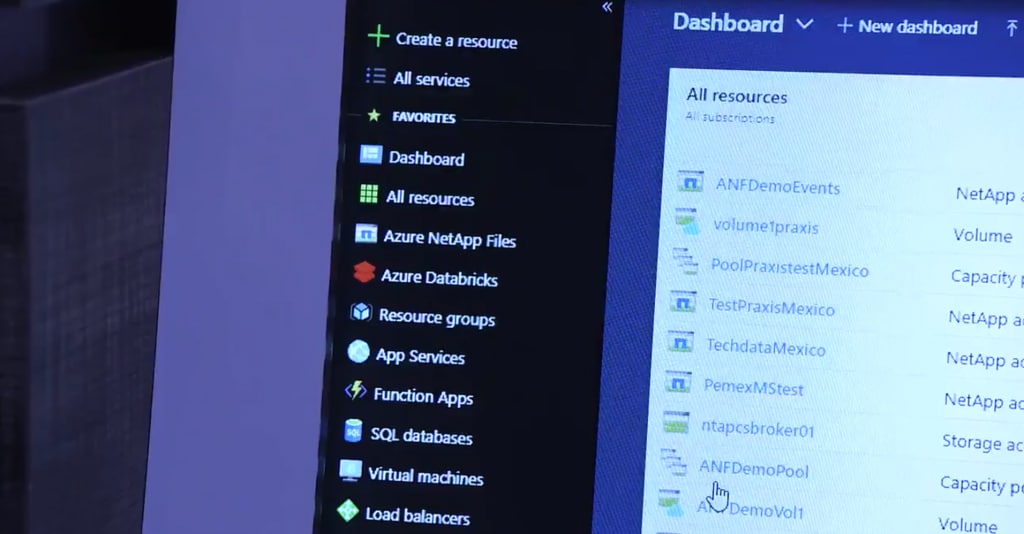

I was whitelisted for ANF, which was extremely cool. NetApp gave me a lab environment and asked me to present it to clients. There were a few bugs, however. Because ANF uses SVMs on physical hardware, there were some cleanup issues at first, but NetApp was able to resolve them. Because it is physical gear, standing up LIFs and IP addresses was also a problem: when I deleted an ANF instance and created a new one with the same IP addresses, I realized the metadata hadn't been cleaned up. I couldn't reuse the IP addresses because Azure reported that they overlapped with an existing IP address, which no longer existed.

Once I got past those small problems, the system was great. I was able to stand up storage pools, create my quotas, and attach them to Linux boxes and Windows servers. Everything ran well right up until I used the same virtual machine that did my SQL load testing. When I ran it against ANF, I was getting response times with 10 minutes of latency, which is incredibly slow.

I was thinking: ANF is supposed to be this great new product where the storage array sits in the same vicinity as the compute, so why is everything running so slowly? When I went back to the ANF team, they suggested that I enable accelerated networking on any virtual machine I attach to ANF.

By default, a virtual machine created in Azure only has standard networking; accelerated networking is not enabled. So I decided to move to accelerated NICs (network interface cards). You create a new NIC with accelerated networking enabled, attach it to the virtual machine, and then remove the old NIC. The key is that accelerated networking has to be enabled when you create the new NIC.
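For reference, the NIC swap can be scripted with the Azure CLI. The Python sketch below only assembles and prints the commands rather than running them. The resource group, VM, VNet, subnet, and NIC names are hypothetical, and it assumes the VM size and OS image support accelerated networking and that the new NIC goes into the VM's existing VNet and region. Azure requires the VM to be deallocated before NICs can be added or removed, so the plan starts there.

```python
import shlex


def accelerated_nic_swap_plan(rg: str, vm: str, old_nic: str, new_nic: str,
                              vnet: str, subnet: str) -> list[list[str]]:
    """Return Azure CLI commands that replace a VM's NIC with an accelerated one."""
    return [
        # 1. Stop (deallocate) the VM so its NICs can be changed.
        ["az", "vm", "deallocate", "--resource-group", rg, "--name", vm],
        # 2. Create the new NIC with accelerated networking turned on at creation time.
        ["az", "network", "nic", "create", "--resource-group", rg, "--name", new_nic,
         "--vnet-name", vnet, "--subnet", subnet, "--accelerated-networking", "true"],
        # 3. Attach the new NIC and make it the primary interface.
        ["az", "vm", "nic", "add", "--resource-group", rg, "--vm-name", vm,
         "--nics", new_nic, "--primary-nic", new_nic],
        # 4. Detach the old, standard-networking NIC.
        ["az", "vm", "nic", "remove", "--resource-group", rg, "--vm-name", vm,
         "--nics", old_nic],
        # 5. Start the VM again.
        ["az", "vm", "start", "--resource-group", rg, "--name", vm],
        # 6. Confirm the setting; the query should return true.
        ["az", "network", "nic", "show", "--resource-group", rg, "--name", new_nic,
         "--query", "enableAcceleratedNetworking"],
    ]


if __name__ == "__main__":
    plan = accelerated_nic_swap_plan("rg-sql-lab", "sqlvm01", "sqlvm01-nic",
                                     "sqlvm01-nic-accel", "vnet-prod", "snet-app")
    for cmd in plan:
        print(shlex.join(cmd))
```

Note that the detached NIC still exists as a resource until you delete it, and the new NIC gets its own private IP address unless you assign one explicitly.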

Once the new NIC with accelerated networking was attached to the virtual machine, I re-ran all my tests and found that it had become incredibly fast. In my opinion, when this rolls out, we will have to make sure that every server or VM attached to ANF has accelerated networking enabled.

As for pricing, I hadn't been watching my bill, so I went to see what was going on there. CVO is priced on a running VM plus all of the managed disks behind it. Even though I wasn't really using it, I had accidentally left the CVO HA instance on. It's currently costing me about $800 a month just to keep the 500GB CVO HA instance running with no data on it.

By contrast, my ANF bill was $0. The nice thing is there's nothing to start and nothing to stop. I still have an ANF instance, but since I no longer have any capacity provisioned on it, NetApp isn't charging me. I'm charged for the capacity I provision and nothing more. There's no compute or HA to worry about because it's all built into Azure. I think leading with an ANF solution, where you can, is the way to go, as it makes billing easier.

ANF is stupidly fast, with amazingly flexible quotas. For my ANF test, I wanted to measure actual throughput and latency. When you create an ANF instance, the smallest storage pool you can create is 4TB. Once the storage pool exists, you create your quotas from there. I created a 150GB volume, the same drive size I used for my other tests: 150GB for all the Azure disks, all the CVO disks, and the ANF volume. Then I ran my test against the 150GB-quota flash volume on ANF. Performance was phenomenal.

Afterward, I increased the quota to 1TB to see what that did for performance. It went up immediately, with no delay: I went into Azure, extended the quota to 1TB, re-ran my storage test, and performance doubled. It's phenomenal, and it's instant. If you need more today, increase your quota and you'll get the speed and throughput you need. If you don't need it tomorrow, decrease the quota and you're back where you started. It's very flexible.
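Why would a bigger quota make the same volume faster? With ANF, a volume's throughput limit scales with its quota and the capacity pool's service level: 16, 64, and 128 MiB/s per TiB for Standard, Premium, and Ultra respectively, for pools using automatic QoS. The minimal Python sketch below computes that ceiling. Premium is assumed here purely for illustration, and 1TB is treated as 1024 GiB. The result is only an upper bound: going from 150 GiB to 1 TiB raises the ceiling by roughly 6.8x, so a measured doubling suggests something else, such as the VM's network bandwidth or the test itself, may have become the limiting factor.

```python
# Throughput ceiling in MiB/s per TiB of volume quota, by service level,
# for capacity pools using automatic QoS.
THROUGHPUT_MIBPS_PER_TIB = {"Standard": 16, "Premium": 64, "Ultra": 128}


def throughput_ceiling_mibps(quota_gib: float, service_level: str) -> float:
    """Return the throughput limit for a volume of the given quota (GiB) and service level."""
    return quota_gib / 1024 * THROUGHPUT_MIBPS_PER_TIB[service_level]


if __name__ == "__main__":
    # Premium is an assumption; compare the 150 GiB and 1 TiB quotas from the test.
    for quota_gib in (150, 1024):
        limit = throughput_ceiling_mibps(quota_gib, "Premium")
        print(f"{quota_gib} GiB on Premium -> {limit:.1f} MiB/s")
```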

Azure NetApp Files is going to be an excellent answer for people with cloud environments who say they can't run tier-one applications because the storage just isn't there. They need more performance, and ANF is a solution for them.

Ryan Beaty

Ryan is a leading IT expert and NCIE-certified NetApp Systems Engineer, with extensive knowledge of NetApp, Azure, VMware, Cisco, and enterprise technologies. He has even earned a coveted spot on the NetApp A-Team as a NetApp Advocate. Ryan has engineered and administered systems and cloud networks since 2005 and currently holds certifications in many relevant technologies. Ryan's primary role at Red8 as a Sr. Systems Engineer is to architect and implement sophisticated technology solutions for customers, including cloud. Beyond understanding how the technology works and what its limitations are, his personal goal is to provide the highest quality available and to paint a clear picture of exactly what the end goal will look like before the implementation begins.